Installation

Install it as a “dev” dependency:

npm i -D perf-ref

Import it in your code:

import { opsPerSec, perfRef, version } from "perf-ref";

Problem

Conceptually, the benchmark package was built to compare two programs running on the same computer.

It’s pointless to run benchmark on a single program in isolation: by itself, an “X ops/s on machine Y” result is useless, because that particular computer is different from every other computer in the world.

We (Codsen, that is, I, Roy) care about our programs’ performance, so we track it: we keep historical records and compare the performance scores of different versions.

We very quickly discovered that benchmarks run on CI differ from benchmarks of the same program run on a local machine. Obviously, a CI provider will have a different CPU/memory/etc. combination! Also, historical scores get skewed whenever we upgrade the work computer:

// historical.json
{
  "4.0.5": 157818.66049426925,
  "4.0.7": 218346.7573924499,
  "4.0.8": 133118.9452751072,
  "4.0.9": 89864.2857142975, // <-- 😱 Oops, ran on CI
  "4.0.10": 155106.4128445344,
  "4.0.11": 149272.44273876454,
  "4.0.12": 377256.08705478627, // <-- 😱 3x increase! Or is it the new laptop?
  "4.1.0": 372294.78720313296, // <-- phew, laptop it seems...
  "4.1.1": 401598.55677969076, // <-- yep, it was laptop
  "lastVersion": 1141598.55677969076 // <-- 🤷
}

So, how do we solve this problem? How can we make performance benchmark scores portable, like Geekbench’s? Except that, instead of Geekbench’s secret test workload, the program being scored is yours.

Solution

We need to normalise the scores. For that, we need:

- an étalon, a standard program of measurement: that’s the perfRef() function this package exports;
- an arbitrary reference performance score: that’s opsPerSec, which was pinned to a benchmark of perfRef() on an M1 Mac Mini.

Now, to calculate an absolute performance score for your program, you’d:

- run benchmark on our étalon, perfRef(), and record the score (1);
- run benchmark on your program and record the score (2);
- scale (2) by the ratio opsPerSec / (1), that is, proportionally increase or decrease (2) by as much as (1) differs from opsPerSec.

This way, we take your computer’s performance out of the equation: your program’s benchmark score is proportionally increased or decreased according to how quickly your computer was able to run the étalon, perfRef().
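The three steps above boil down to a single proportion. Below is a minimal sketch, assuming you already have both ops/s figures from whatever benchmark runner you use. normaliseScore is a hypothetical helper, not part of perf-ref’s API, and the value 182 is copied from opsPerSec; in real code you would import opsPerSec from "perf-ref" rather than hard-code it.

```javascript
// Reference score: what an M1 Mac Mini achieved on perfRef().
// Assumption: hard-coded here; in real code use
// import { opsPerSec } from "perf-ref";
const REFERENCE_OPS_PER_SEC = 182;

/**
 * Hypothetical helper (not exported by perf-ref): rescales your program's
 * ops/s as if it had been measured on the reference machine.
 *
 * @param {number} programOpsPerSec - score (2): your program on this machine
 * @param {number} perfRefOpsPerSec - score (1): perfRef() on this machine
 * @param {number} [referenceOpsPerSec] - the frozen reference score
 * @returns {number} normalised ops/s
 */
function normaliseScore(
  programOpsPerSec,
  perfRefOpsPerSec,
  referenceOpsPerSec = REFERENCE_OPS_PER_SEC
) {
  if (!(perfRefOpsPerSec > 0)) {
    throw new RangeError("perfRef() score must be a positive number");
  }
  // A machine that runs perfRef() twice as fast as the reference also
  // inflates your program's score roughly 2x, so divide that factor back out.
  return programOpsPerSec * (referenceOpsPerSec / perfRefOpsPerSec);
}

// Made-up figures: this machine runs perfRef() at 364 ops/s (2x the
// reference) and your program at 50,000 ops/s.
console.log(normaliseScore(50000, 364)); // 25000
```

The helper divides out the machine’s speed factor rather than adding or subtracting a fixed offset, because benchmark scores scale multiplicatively with CPU speed.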

API — perfRef()

The function perfRef() is imported like this:

import { perfRef } from "perf-ref";

It’s a CPU-bound function that performs many meaningless calculations and uses as many different JS methods as possible.

It is set in stone and we will aim to never update or change it in any way.
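To get score (1), you benchmark perfRef() like any other function. The benchmark package does this with proper statistics; the sketch below is a deliberately simplified, hypothetical stand-in (measureOpsPerSec is not part of perf-ref) that just counts how many calls fit into a fixed time window, using the performance.now() timer that modern Node.js and browsers expose globally. The placeholder workload stands in for perfRef(), which you would pass instead in real code.

```javascript
/**
 * Hypothetical helper: rough ops/s of a synchronous function.
 * Unlike the benchmark package, it does no statistical analysis,
 * so treat its output as an estimate only.
 *
 * @param {() => unknown} fn - the function to time, e.g. perfRef
 * @param {number} [durationMs] - how long to keep calling fn (must be > 0)
 * @param {number} [warmupRuns] - untimed calls to let the JIT settle
 * @returns {number} calls per second
 */
function measureOpsPerSec(fn, durationMs = 1000, warmupRuns = 10) {
  // Warm-up calls are not counted, so JIT compilation skews the result less.
  for (let i = 0; i < warmupRuns; i++) fn();

  let runs = 0;
  let elapsed = 0;
  const start = performance.now();
  // Keep calling fn until the time window has elapsed.
  do {
    fn();
    runs++;
    elapsed = performance.now() - start;
  } while (elapsed < durationMs);

  // Convert "runs per elapsed milliseconds" into runs per second.
  return runs / (elapsed / 1000);
}

// Placeholder CPU-bound workload; in real code pass perfRef from "perf-ref".
function placeholderWork() {
  let acc = 0;
  for (let i = 0; i < 1e5; i++) acc += Math.sqrt(i);
  return acc;
}

console.log(`${measureOpsPerSec(placeholderWork, 200).toFixed(1)} ops/s`);
```

Whatever tool you use, measure perfRef() and your own program with the same tool on the same machine in the same session, so that both scores are affected by the same conditions.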

API — opsPerSec

The static value opsPerSec is imported like this:

import { opsPerSec } from "perf-ref";

console.log(opsPerSec);

// => 182

It is an arbitrary, forever-frozen score: once upon a time, an M1 Mac Mini ran perfRef() 182 times per second (a statistically significant result, of course). The faster or slower perfRef() benchmarks on your computer compared to that, the more you proportionally adjust your program’s benchmark score.
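Written as a formula, with $S_{\text{perfRef}}$ as score (1), $S_{\text{program}}$ as score (2) and 182 as the frozen opsPerSec, the adjustment works as follows. The machine figures in the second line are made up purely to illustrate the arithmetic for a computer slower than the reference:

```latex
S_{\text{normalised}} = S_{\text{program}} \times \frac{\texttt{opsPerSec}}{S_{\text{perfRef}}}

% Hypothetical: an older laptop runs perfRef() at 91 ops/s and your program at 40,000 ops/s
S_{\text{normalised}} = 40\,000 \times \frac{182}{91} = 40\,000 \times 2 = 80\,000 \text{ ops/s}
```

The laptop is half as fast as the reference machine, so your program’s raw score is doubled to make it comparable.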

The value is set in stone, and we aim never to update or change it in any way. That’s why the program lives outside the monorepo on our GitHub account.

API — version

You can import version:

import { version } from "perf-ref";

console.log(version);

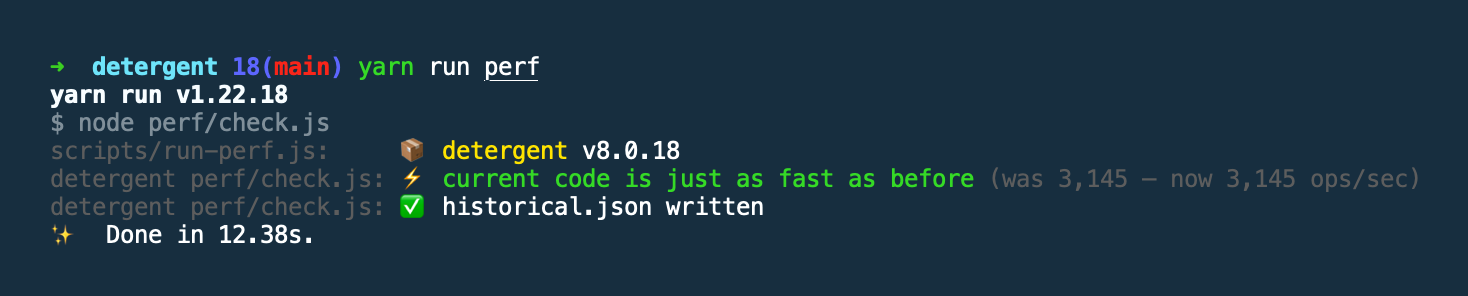

Example

See the codsen monorepo benchmark script, which produces the historical benchmark records for each package, such as this. Also, see our runner script.