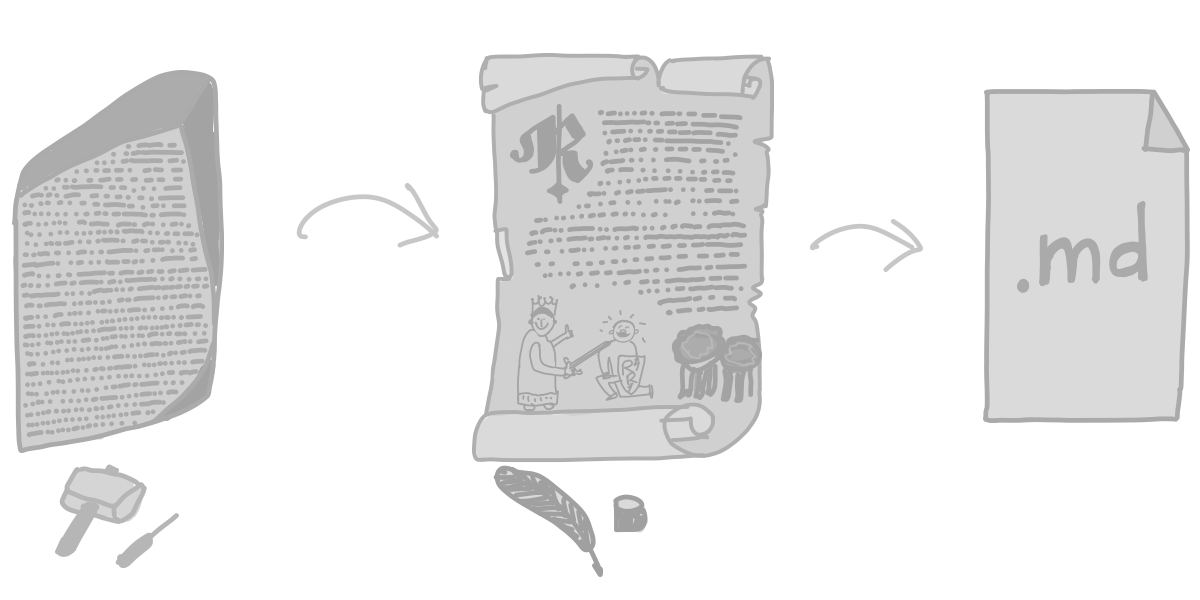

Evolution of our npm Packages, from a README perspective

by Roy Revelt · posted on · monorepo, indieweb

As our npm package count grows, README automation becomes more and more of an issue. Installation instructions, badges and contribution guidelines can be generated automatically, but many other chapters can’t.

Here’s our story from a README perspective.

The Beginning

At first, we had a handful of npm packages, a folder with a few subfolders, and didn’t manage anything at all: each npm package had its own, manually written README.md.

The more recently a README had been edited, the nicer it was, because we kept experimenting and adding features like badges. There was lots of copy-pasting, and a risk that stale bits of an old README would survive and end up published in a new one.

Level 2 — np

Later we discovered np, a CLI helper from Mr Sorhus. The idea is genius: you hack away on a package, and when release time comes, you trigger np. It wipes node_modules, installs fresh and runs all tests; if they all pass, np asks you for the next version, publishes to npm and pushes to the git remote.
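To make that flow concrete, here is a rough TypeScript sketch of the steps described above. It is not np’s source code (np performs many more checks, such as making sure the working tree is clean); the releaseSteps and release names are hypothetical, and it assumes npm and git are available on PATH.

```typescript
import { execSync } from "node:child_process";
import { rmSync } from "node:fs";
import { join } from "node:path";

// One shell command in the release pipeline (hypothetical structure).
export interface Step {
  label: string;
  command: string;
}

// Ordered release steps: tests run before anything is bumped or published.
// `version` is anything `npm version` accepts, e.g. "patch", "minor", "1.2.3".
export function releaseSteps(version: string): Step[] {
  return [
    { label: "fresh install", command: "npm install" },
    { label: "run tests", command: "npm test" },
    // Inside a git repository, npm version also creates a commit and a tag.
    { label: "bump version", command: `npm version ${version}` },
    { label: "publish to npm", command: "npm publish" },
    { label: "push commit and tag", command: "git push --follow-tags" },
  ];
}

// Wipe node_modules, then run each step in order. execSync throws on a
// non-zero exit code, so a failing test run aborts before publishing.
export function release(pkgDir: string, version: string): void {
  rmSync(join(pkgDir, "node_modules"), { recursive: true, force: true });
  for (const step of releaseSteps(version)) {
    console.log(`> ${step.label}: ${step.command}`);
    execSync(step.command, { cwd: pkgDir, stdio: "inherit" });
  }
}
```

Keeping the step list separate from the runner makes the ordering easy to inspect: nothing reaches npm unless the fresh install and the tests succeed first.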

Level 3 — let’s loop np

By this time we had around 20 packages, but we didn’t release that often. Releasing was done by hand, one package folder at a time, so we thought: what if we could loop over the folders and call np inside each one?

We did that.

All READMEs were still written manually, though.
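The folder loop from this level could look roughly like the following sketch. The helper names listPackageDirs and releaseAll are hypothetical, and it assumes every package is a direct subfolder containing a package.json, with np installed and on PATH.

```typescript
import { spawnSync } from "node:child_process";
import { existsSync, readdirSync } from "node:fs";
import { join } from "node:path";

// Every direct subfolder of `root` that holds a package.json, sorted by path.
export function listPackageDirs(root: string): string[] {
  return readdirSync(root, { withFileTypes: true })
    .filter((entry) => entry.isDirectory())
    .map((entry) => join(root, entry.name))
    .filter((dir) => existsSync(join(dir, "package.json")))
    .sort();
}

// Run np inside each package folder, one at a time. stdio is inherited so
// np's interactive version prompt still reaches the terminal.
export function releaseAll(root: string): void {
  for (const dir of listPackageDirs(root)) {
    const result = spawnSync("np", [], { cwd: dir, stdio: "inherit" });
    if (result.status !== 0) {
      throw new Error(`np failed in ${dir}, stopping the loop`);
    }
  }
}
```

Stopping at the first failure is a deliberate choice in this sketch: a broken package should not be silently skipped while the rest get released.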

Level 4 — lect

At some point it occurred to us that we could generate specific README chapters fresh, swap them into the README, put this in a script and run it often.

For that, we created a CLI, lect. There is a single config in the /packages/ root, and each package.json can add its own per-package settings.

This approach differs from Mr Schlinkert’s verb, which keeps the README contents in a separate file, .verb.md, and renders it into README.md each time.
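Chapter replacement can be sketched in a few lines of TypeScript. This hypothetical replaceChapter helper is not lect’s code: it assumes every chapter starts with a level-2 "## " heading and that no "## " line appears inside a fenced code block (a real tool would have to skip those). Unlike the verb approach, the README itself stays the source of truth; only the targeted chapter is rewritten.

```typescript
// Replace the body of one "## Heading" chapter in a README string,
// leaving every other chapter untouched. Hypothetical helper for illustration.
export function replaceChapter(readme: string, heading: string, body: string): string {
  const lines = readme.split("\n");
  const start = lines.findIndex((line) => line.trim() === `## ${heading}`);
  if (start === -1) {
    // Chapter missing: append it at the end of the file.
    return `${readme.trimEnd()}\n\n## ${heading}\n\n${body.trim()}\n`;
  }
  // The chapter runs until the next level-2 heading ("### " lines stay inside)
  // or until the end of the file.
  let end = lines.findIndex((line, i) => i > start && line.startsWith("## "));
  if (end === -1) end = lines.length;
  const replacement = ["", body.trim(), ""];
  return [...lines.slice(0, start + 1), ...replacement, ...lines.slice(end)].join("\n");
}
```

For example, replaceChapter(readme, "Install", "npm i my-pkg") regenerates only the Install chapter, so hand-written chapters such as the API description survive every run.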

Over time we added more and more automation to lect, covering the maintenance of auxiliary files: .npmignore, .npmrc, .prettierignore, LICENSE, even rollup.config.js.

For example, take a glance at the email-comb Rollup config: it’s generated.
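A minimal sketch of such auxiliary-file maintenance, assuming one generator function per file fed by package.json data. The file contents below are placeholders, not the templates lect actually writes, and syncAuxFiles is a hypothetical name.

```typescript
import { existsSync, readFileSync, writeFileSync } from "node:fs";
import { join } from "node:path";

// The few package.json fields this sketch reads.
export interface PackageJson {
  name: string;
  license?: string;
}

// One generator per auxiliary file. Contents are illustrative placeholders.
const generators: Record<string, (pkg: PackageJson) => string> = {
  ".npmrc": () => "package-lock=false\n",
  ".prettierignore": () => "dist\ncoverage\n",
  LICENSE: (pkg) => `${pkg.license ?? "MIT"} License\n\n(full license text for ${pkg.name})\n`,
};

// Regenerate every auxiliary file, writing only when the content changed,
// so reruns are cheap and git diffs stay empty when nothing moved.
// Returns the names of the files that were (re)written.
export function syncAuxFiles(pkgDir: string, pkg: PackageJson): string[] {
  const written: string[] = [];
  for (const [fileName, generate] of Object.entries(generators)) {
    const target = join(pkgDir, fileName);
    const next = generate(pkg);
    const current = existsSync(target) ? readFileSync(target, "utf8") : null;
    if (current !== next) {
      writeFileSync(target, next);
      written.push(fileName);
    }
  }
  return written;
}
```

Because the generated files are fully owned by the script, any manual edit to them is simply overwritten on the next run.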

Level 5 — monorepo, commitizen

Conceptually, Lerna does three things for us:

- Bootstrapping dependencies: deduping them and “lifting” them from package folders to the root [2022 edit: that is done automatically these days by npm/yarn workspaces]

- While integrating with commitizen, when release time comes, filling CHANGELOG files (completely automating them) and bumping the version in package.json. Once all versions are bumped, you’re king: you can loop over all folders calling npm publish inside each, and since npm won’t publish a version that already exists on the registry, only Lerna-bumped packages will get published.

- lerna run is an excellent async script runner. [2022 edit: replaced with Turbo]
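The publish loop from the second point can be sketched as follows. Instead of relying on npm to reject an already-published version, this hypothetical variant asks the registry first with npm view <name> versions --json and skips packages whose local version is already there. The LocalPackage shape and the helper names are assumptions for illustration.

```typescript
import { execSync } from "node:child_process";

// A monorepo package as this sketch sees it (hypothetical shape).
export interface LocalPackage {
  dir: string;
  name: string;
  version: string;
}

// A package needs publishing only if its current version is not on the registry yet.
export function needsPublish(localVersion: string, published: readonly string[]): boolean {
  return !published.includes(localVersion);
}

// Query the registry. npm view prints a JSON array of versions, or a single
// string when there is just one; it exits non-zero for unpublished packages.
export function publishedVersions(name: string): string[] {
  try {
    const raw = execSync(`npm view ${name} versions --json`, { encoding: "utf8" });
    const parsed: unknown = JSON.parse(raw);
    return Array.isArray(parsed) ? parsed.map(String) : [String(parsed)];
  } catch {
    // Treat "not found" as "nothing published"; npm publish itself
    // still refuses to overwrite an existing version.
    return [];
  }
}

// Publish every package whose version was bumped since its last release.
export function publishBumped(packages: readonly LocalPackage[]): void {
  for (const pkg of packages) {
    if (needsPublish(pkg.version, publishedVersions(pkg.name))) {
      execSync("npm publish", { cwd: pkg.dir, stdio: "inherit" });
    }
  }
}
```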

We’re pretty sure we’re using it in strange ways, but it does solve our technical challenges, namely:

- Cohesion within an ecosystem of packages. In other words, if you code up a new feature in one package, how do you check whether you broke anything upstream or downstream, dependency-wise? Manually, it’s tedious: either symlink or copy-paste builds into the node_modules of another package, run its tests, then restore things as they were. In a monorepo, that’s automated. Plus, we can lerna run test, which runs the test script inside each monorepo package. Think Mr Bahmutov’s dont-break, but within monorepo boundaries and automated.

- node_modules duplication and sheer gluttony. Outside a monorepo, each package is a standalone folder with its own node_modules, which np wipes and reinstalls. We calculated that gigabytes of dependencies were wiped and downloaded each time, leaving the laptop pretty much unusable, fans burning for the whole release (an hour or so).

At the time of writing, the root node_modules of our 115-package monorepo has 1,107 dependency folders, weighing only 191 MB. That’s tiny by node_modules standards.
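The “did I break anything downstream?” question from the first point boils down to a graph walk: invert the “depends on” edges and collect everything reachable from the changed package. A minimal sketch, assuming a hypothetical dependentsOf helper and a dependency map built from each package.json; walking the original, non-inverted edges the same way would list the upstream packages instead.

```typescript
// Package name -> names of the monorepo packages it depends on.
export type DepGraph = Record<string, readonly string[]>;

// Every package that depends on `changed`, directly or transitively:
// the downstream packages whose tests should rerun after a change.
export function dependentsOf(graph: DepGraph, changed: string): string[] {
  // Invert the edges: dependency -> packages that use it.
  const usedBy = new Map<string, string[]>();
  for (const [pkg, deps] of Object.entries(graph)) {
    for (const dep of deps) {
      const users = usedBy.get(dep) ?? [];
      users.push(pkg);
      usedBy.set(dep, users);
    }
  }
  // Breadth-first walk; `seen` also guards against dependency cycles.
  const seen = new Set<string>();
  const queue: string[] = [changed];
  while (queue.length > 0) {
    const current = queue.shift() as string;
    for (const user of usedBy.get(current) ?? []) {
      if (user !== changed && !seen.has(user)) {
        seen.add(user);
        queue.push(user);
      }
    }
  }
  return Array.from(seen).sort();
}
```

With { a: [], b: ["a"], c: ["b"] }, a change in a means the test suites of b and c should run too.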

For the record, Lerna has warts too. For example, we couldn’t use lerna add, so we wrote our own linker CLI, lerna-link-dep. Nor could we use lerna publish: we go as far as lerna version and use other means from there on. We also use our own package updater CLI, but that deserves a separate post.

Level 6 — Releases through CI only

Open-source npm package monorepos can benefit from CI just like commercial web applications do: linting, building and unit testing all benefit from being run on a machine separate from the local dev environment.

See our current CI config yml script here.

Moving npm package publishing to CI is a very wise choice, although very few people share their CI configs or talk about their CI setups. We had to assemble our CI script from scratch, and it took a couple of weeks to nail it.

If you remember Mr Lindesay’s is-promise story, one of its outcomes was that releasing moved to CI.

Level 7 — own website

From Indieweb principles:

“own your data, scratch your itches, build tools for yourself, selfdogfood, document your stuff, open source your stuff, UX design is more important than protocols, visible data for humans first and machines second, platform-agnostic platforms, plurality over monoculture, longevity, and remember to have fun!” -Indieweb principles, https://indieweb.org/principles

Moving all the npm package README files to an owned website makes sense.

README updates won’t warrant package releases any more.

The website and the programs monorepo are separate git repositories, so their issue trackers are separate too. This lets us treat documentation amendments properly, from issue templates to meaningful CI checks, keeping the concerns separated.

Outside contributors can raise tickets and help update a README without touching the programs’ source code.

There are SaaS companies out there which “quality assess” open-source npm packages, judging whether they are “suitable for corporate” use or not. I was shocked to find out that excessive (by their standards) patch releasing is deemed a sign of low code quality. Oops! Our pursuit of README perfection, where every README fix meant a patch release, is apparently harmful!

Another argument for moving READMEs off npm and the git provider is UX, and features in general. Your website can easily outdo npm or GitHub/GitLab/Bitbucket:

- Proper dark mode, with manual and auto settings

- Full control over images and their caching

- Complete control over markdown rendering. Did you know that markdown-it, the markdown library Eleventy uses, can have plugins, and that at the moment there are 388 available? Are you sure your README markdown receives the best possible treatment in the npm/GitHub/GitLab/Bitbucket walled gardens?

- Heading anchors, TOCs. Smooth scroll, anybody?

- Interactive charts

- Code playgrounds embedded as iframes, for example RunKit (not to mention the usual CSS playgrounds like JS Bin or CodePen)

- Improved page layouts

- Cross-selling and up-selling, for example, cross-linking the hell out of articles, packages and tags

- Banners, if you wish

Owning data and hosting it on your terms gives fantastic opportunities!

Level 8 — TypeScript, MDX and tsd-extract

As we migrated all our npm packages to TypeScript, each package gained a type definitions file, types.d.ts.

I wrote a tiny package, tsd-extract, which takes the contents of a .d.ts file as a string, extracts any declaration by name and returns it as a string. There’s no full-blown parsing, just the familiar scannerless parser algorithm, the same approach perfected in email-comb, html-crush and edit-package-json.

As a result, I can be sure that any declaration code block in a README is always up to date.
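To illustrate the scannerless idea, here is a simplified, hypothetical extractDeclaration function: it finds the line that starts the named declaration, then walks forward character by character, counting brackets, until the declaration ends. It is not tsd-extract’s real API, and it ignores brackets inside strings and comments, which a production tool has to handle.

```typescript
// Extract one top-level declaration (function, const, interface or type)
// from .d.ts source text by name, without building an AST.
export function extractDeclaration(source: string, name: string): string | null {
  // "$" is the only regex-special character allowed in JS identifiers.
  const safeName = name.split("$").join("\\$");
  const start = new RegExp(
    `^(?:export\\s+)?(?:declare\\s+)?(function|const|interface|type)\\s+${safeName}(?![\\w$])`,
    "m",
  ).exec(source);
  if (!start) return null;

  const isInterface = start[1] === "interface";
  let depth = 0;
  for (let i = start.index; i < source.length; i++) {
    const ch = source[i];
    if (ch === "{" || ch === "(" || ch === "[") {
      depth++;
    } else if (ch === "}" || ch === ")" || ch === "]") {
      depth--;
      // An interface body ends at its closing brace; there is no semicolon.
      if (isInterface && ch === "}" && depth === 0) return source.slice(start.index, i + 1);
    } else if (ch === ";" && depth === 0 && !isInterface) {
      // Other declarations end at the first semicolon outside any brackets.
      return source.slice(start.index, i + 1);
    }
  }
  return null; // unterminated declaration
}
```

The bracket counter is what makes nested object types safe: semicolons inside { a: string; b: number } sit at depth 1 and do not end the declaration.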

Conceptually, before we run a Remix build (remix dev), we run a script which reads (fs.readFileSync()) all .d.ts files and compiles them into a .ts file that exports a plain object lump of all the data. Remix then reads that file and bundles it up. Development builds trigger the compilation; production builds don’t. This way we save pennies on the CI bill, because CI only makes production builds. I’m in the process of migrating to Remix loaders, so that static files are bundled server-side only and the client gets a pre-filtered subset of the data.
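The pre-build step can be sketched like this. The folder layout (each package’s types.d.ts sitting directly in packages/<name>/), the function names and the dts export name are assumptions; the point is that JSON.stringify turns the collected strings into a valid object literal, so the generated .ts file is plain data that a bundler can pick up.

```typescript
import { existsSync, readdirSync, readFileSync, writeFileSync } from "node:fs";
import { join } from "node:path";

// Map each package folder name to the raw text of its types.d.ts.
// Assumed layout: <packagesRoot>/<name>/types.d.ts
export function collectTypes(packagesRoot: string): Record<string, string> {
  const result: Record<string, string> = {};
  const folders = readdirSync(packagesRoot, { withFileTypes: true })
    .filter((entry) => entry.isDirectory())
    .map((entry) => entry.name)
    .sort(); // stable key order keeps the generated file diff-friendly
  for (const name of folders) {
    const dtsPath = join(packagesRoot, name, "types.d.ts");
    if (existsSync(dtsPath)) {
      result[name] = readFileSync(dtsPath, "utf8");
    }
  }
  return result;
}

// Write a .ts module exporting all declarations as one plain object.
// JSON.stringify escapes quotes and newlines, so the output is a valid literal.
export function writeTypesModule(packagesRoot: string, outFile: string): void {
  const body = JSON.stringify(collectTypes(packagesRoot), null, 2);
  writeFileSync(
    outFile,
    `// Generated file, do not edit by hand.\nexport const dts: Record<string, string> = ${body};\n`,
  );
}
```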